I’m moving my blog to https://qualitybits.tech – check it out for more content.

There are some words in the Quality Analysis field that I have grown to dislike; let me start with “perfect”. As I learned more and grew in my career, I realised that we need to be a bit more careful about how we talk about our field and what exactly we expect from it. If we are not, we risk stagnating in siloed QA tribes, full of grudges and frustration at never getting perfect software.

Perfect software does not exist, and bugs do not mean that quality is bad. What matters is how you deal with bugs and mistakes, and how you carry on working afterwards.

Even in 2019, at conferences (quality-focused and otherwise), I occasionally hear QAs say that they got into the field because they are perfectionists and they LOVE perfect software. Then I silently cringe and, honestly, feel a bit sad, because I’ve been there. I was hurt when my reported bugs were not fixed, and I felt that nobody understood me or appreciated my work. However, this often goes hand in hand with how the organisation is built and which ways of working it has put in place.

When all roles collaborate, there is more empathy and more shared responsibility for any kind of issue that gets noticed. Bugs are not a sign of bad quality; bugs are… inevitable. When we accept that there is no such thing as perfect software, only software with no known bugs, we can treat a bug that does pop up as a learning opportunity. Working practices such as a zero-bug policy can help us with that.

In my team, the backlog has no bugs: not because there are none, but because right now we are not aware of any. For us, a zero-bug policy does not mean that we have zero bugs at all times; it means that once we discover a bug, it gets the highest priority and is tackled as soon as possible. No bug is left untackled, and we grab the opportunity to learn immediately. An important detail is that we say tackled, not necessarily fixed: some bugs end up being fixed as expected and some do not, but a decision is always made. (This reminds me that at the start of my career I was asked to include the expected result in my bug reports, whereas in my current team our reports do not have that field: we discuss it through other ceremonies and collaboration instead of me dictating how things should work.)

And this leads me to one of the main lessons I have learned:

Some bugs really are not that important: the value a product delivers may outweigh having a bug-free product.

We have to make trade-offs. Sometimes a pixel shift in the UI means nothing, even if it looks ugly to us (how many times I fought for bugs I thought were disastrous!). However, it all depends on the product: for a UI-focused product it may be a deal-breaker, while for a different product it is nothing.

What changed my career was getting exposure to analytics, monitoring, metrics, and logs. Understanding what actually matters to users is eye-opening. As QAs we may think that we represent the customers, but we may be surprised! Analytics also let us quantify the impact of bugs (my article on StickyMinds about monitoring goes into more depth).

We all make mistakes. High-quality software means that we can recover from mistakes faster and handle failure gracefully.

Netflix has set a great example of failing on purpose with its Simian Army. To quote Cory Bennett and Ariel Tseitlin, who wrote about it:

“We have found that the best defense against major unexpected failures is to fail often.”

Forget perfection: strive for continuous improvement by learning from mistakes, and keep working on good ways of working and healthy practices that add up to a high-quality product.

P.S. Two books that came to mind while writing this up, and that I can definitely recommend, are Perfect Software: And Other Illusions about Testing by Gerald Weinberg, and Accelerate by Gene Kim, Jez Humble, and Nicole Forsgren. The first touches on the perfection aspect quite a bit at a high level, while the second focuses more on high-performing teams and how to measure success better.

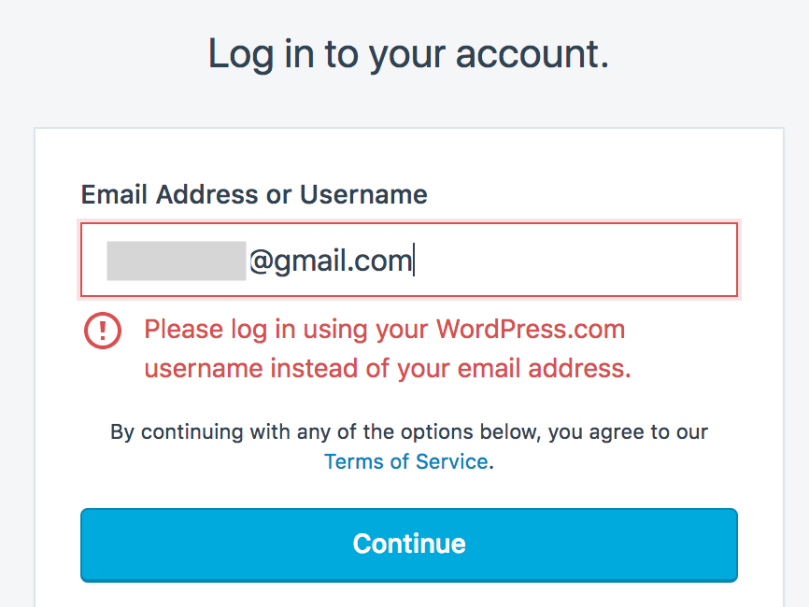

Why would the field say “Email Address or Username” when only a username is allowed? I entered the correct e-mail, then managed to have a login link sent to that same e-mail and logged in by clicking it (since I could not guess my username). It sums up why you should always think twice about design: how users will interact with your product and how they will feel afterwards.